# Spark Ecosystem Components

– Spark Core: The foundation of a Spark application, on which the other components directly depend. It provides a platform for a wide variety of applications, with scheduling, distributed task dispatching, in-memory processing, and data referencing.
– Spark Streaming: The component that works on live streaming data to provide real-time analytics. Live data is ingested in discrete units called batches, which are executed on Spark Core.
– Spark SQL: The component that works on top of Spark Core to run SQL queries on structured or semi-structured data. DataFrames are the way to interact with Spark SQL.
– GraphX: The graph computation engine, or framework, for processing graph data. It provides various graph algorithms that run on Spark.

Thanks to RDDs, programming in Spark is easier than in Hadoop.

Spark uses RDDs (Resilient Distributed Datasets) to delegate workloads to individual nodes, which supports iterative applications.

Spark is a general-purpose cluster computing system that provides high-level APIs in Scala, Python, Java, and R. It was developed to overcome the limitations of Hadoop's MapReduce paradigm. Spark is commonly cited as running up to 100 times faster than MapReduce, because it can cache data in memory, whereas MapReduce works mostly by reading from and writing to disk. This in-memory processing is what makes it powerful and fast. It processes data from diverse sources such as the Hadoop Distributed File System (HDFS), Amazon S3, Apache Cassandra, MongoDB, Alluxio, and Apache Hive. It can run on Hadoop YARN (Yet Another Resource Negotiator), Mesos, EC2, Kubernetes, or in standalone cluster mode.
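To make the in-memory caching idea concrete, here is a minimal PySpark sketch. It is an illustration only: the function names `square` and `run_cached_rdd_demo`, the application name, and the `local[*]` master are assumptions made for this example, and it needs a working PySpark installation to run.

```python
def square(x):
    """Plain Python function applied to every element of the RDD."""
    return x * x


def run_cached_rdd_demo():
    """Build an RDD, cache it, and run two actions against the cached data."""
    # Imported here so this file can still be loaded where PySpark is not installed.
    from pyspark.sql import SparkSession

    spark = (
        SparkSession.builder
        .master("local[*]")            # assumption: run locally on all cores
        .appName("rdd-cache-sketch")   # hypothetical application name
        .getOrCreate()
    )
    try:
        numbers = spark.sparkContext.parallelize(range(1, 1001))
        # cache() marks the RDD to be kept in memory once it has been computed.
        squares = numbers.map(square).cache()
        total = squares.sum()    # first action: computes the RDD and caches it
        count = squares.count()  # second action: can reuse the cached partitions
        return total, count
    finally:
        spark.stop()
```

Calling `run_cached_rdd_demo()` returns the sum and the count of the squared values. The second action is where caching pays off, which is the pattern iterative applications rely on.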

# How to install Spark on Windows

Spark is an open-source framework for running analytics applications. It is a data processing engine, hosted at the vendor-independent Apache Software Foundation, for working on large data sets or big data.

Create a directory `winutils` with a subdirectory `bin` and copy the downloaded winutils.exe into it, so that its path becomes `c:\winutils\bin\winutils.exe`.

Spark SQL supports Apache Hive through HiveContext, a specialized SQLContext for working with Hive in Spark. Apache Hive is data warehouse software for analyzing and querying large datasets, stored principally in Hadoop files, using SQL-like queries.

The next step is to change the access permissions on the `c:\tmp\hive` directory using winutils.exe:

– Create a `tmp` directory containing a `hive` subdirectory, if it does not already exist, so that its path becomes `c:\tmp\hive`.
– Change directory to `winutils\bin` by executing: `cd c:\winutils\bin`
– Change the access permissions using winutils.exe: `winutils.exe chmod 777 \tmp\hive`
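The directory and permission steps for `c:\tmp\hive` can also be scripted. The Python sketch below is illustrative rather than part of the original procedure. The function names are hypothetical, and the drive root is a parameter so the directory logic can be tried somewhere other than `c:\`. It assumes winutils.exe sits at `c:\winutils\bin\winutils.exe` and that the current drive is `C:` when the chmod command runs.

```python
import subprocess
from pathlib import Path

# Location used in this tutorial; adjust if winutils.exe lives elsewhere.
WINUTILS_EXE = r"c:\winutils\bin\winutils.exe"


def ensure_hive_dir(drive_root):
    """Create <drive_root>/tmp/hive if it does not exist and return its path."""
    hive_dir = Path(drive_root) / "tmp" / "hive"
    hive_dir.mkdir(parents=True, exist_ok=True)
    return hive_dir


def chmod_command(winutils_exe=WINUTILS_EXE, target=r"\tmp\hive"):
    """Build the argument list for the winutils chmod 777 call."""
    return [winutils_exe, "chmod", "777", target]


def grant_hive_permissions():
    """Run the chmod step; only meaningful on Windows with winutils.exe in place."""
    subprocess.run(chmod_command(), check=True)
```

On Windows, `ensure_hive_dir("c:\\")` followed by `grant_hive_permissions()` mirrors the manual steps. Calling winutils.exe by its full path stands in for the `cd` step.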


Apache Spark 2.4.0 has a reported bug that breaks worker.py and makes that release incompatible with Windows. Also ensure that Python 2.7 is not installed independently if you are using a Python 3 development environment.

## Install Python Development Environment

Enthought Canopy is a Python development environment, just like Anaconda. If you are already using one, you are covered as long as it is a Python 3 or higher development environment. (If you prefer not to use a development environment, you can also install Python 3 manually and set up the environment variables for your installation.)

Download the Windows version of Enthought Canopy 2.1.9 that is compatible with your system. (A pre-installed Python 2.7 may conflict with what the development environment installs for Python 3.) Follow the installation wizard to complete the installation.

It is advisable to change the log4j log level from INFO to ERROR to avoid unnecessary console clutter in spark-shell. To do this, open log4j.properties in an editor and replace INFO with ERROR on line 19.

Spark uses Hadoop internally for file system access. Even if you are not working with Hadoop (or are only using Spark for local development), Windows still needs Hadoop to initialize the Hive context; otherwise Java throws java.io.IOException. This can be fixed by adding a dummy Hadoop installation that tricks Windows into believing that Hadoop is actually installed. Download winutils.exe for Hadoop 2.7.
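The log4j change described above can be made with a small script instead of a manual edit. This is a sketch under one stated assumption: that the INFO being replaced belongs to the root logger entry, written as `log4j.rootCategory=INFO, console`. Check your own file, because the line number and key may differ between releases. The function names are hypothetical.

```python
import re
from pathlib import Path

# Assumption: the root logger entry looks like "log4j.rootCategory=INFO, console".
ROOT_LOGGER = re.compile(
    r"^([ \t]*log4j\.rootCategory[ \t]*=[ \t]*)\w+", re.MULTILINE
)


def set_root_log_level(properties_text, level="ERROR"):
    """Return properties_text with the root logger level replaced by level."""
    return ROOT_LOGGER.sub(lambda m: m.group(1) + level, properties_text, count=1)


def update_properties_file(path, level="ERROR"):
    """Rewrite a log4j.properties file in place with the new root logger level."""
    file_path = Path(path)
    file_path.write_text(set_root_log_level(file_path.read_text(), level))


sample = (
    "# Set everything to be logged to the console\n"
    "log4j.rootCategory=INFO, console\n"
    "log4j.appender.console=org.apache.log4j.ConsoleAppender\n"
)
print(set_root_log_level(sample))
```

Only the level on the root logger line is changed; the appender name after the comma and every other line are left as they were.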

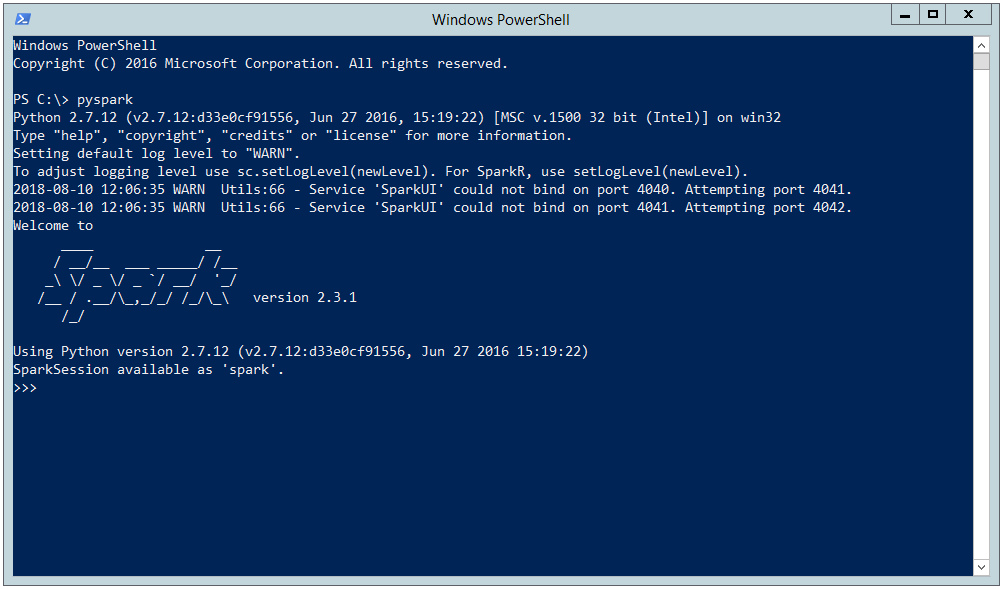

Spark supports a number of programming languages, including Java, Python, Scala, and R. In this tutorial, we will set up Spark with a Python development environment using the Spark Python API (PySpark), which exposes the Spark programming model to Python.

Pointers for smooth installation:

– As of the writing of this blog, Spark is not compatible with Java 9 or later. Please ensure that you install Java 8 to avoid installation errors.
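The Java pointer can be checked before starting the installation. The following Python sketch is an illustration, not part of the original steps. The helper names are hypothetical. It relies on `java -version` printing a quoted version string such as `1.8.0_201` for Java 8 or `11.0.2` for Java 11.

```python
import re
import subprocess


def java_major_version(version_string):
    """Return the major Java version from a string like '1.8.0_201' or '11.0.2'."""
    match = re.match(r"(\d+)(?:\.(\d+))?", version_string)
    if match is None:
        raise ValueError("unrecognized Java version: " + repr(version_string))
    first, second = int(match.group(1)), match.group(2)
    # Java 8 and earlier report themselves as 1.x, so the major version is x.
    if first == 1 and second is not None:
        return int(second)
    return first


def is_java_8(version_string):
    """True when the version string denotes Java 8, the version this tutorial asks for."""
    return java_major_version(version_string) == 8


def installed_java_version():
    """Return the version string reported by `java -version`, or None if unavailable."""
    try:
        result = subprocess.run(
            ["java", "-version"], capture_output=True, text=True, check=False
        )
    except OSError:
        return None  # java is not on the PATH
    # java -version normally writes to stderr; stdout is scanned as a fallback.
    match = re.search(r'version "([^"]+)"', result.stderr + result.stdout)
    return match.group(1) if match else None
```

A pre-flight check could call `installed_java_version()` and stop with a clear message when the result is `None` or `is_java_8` returns `False`.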
